This is a blog post about a video, which is about new color-conversion LUTs for Apple Log footage from the iPhone 15 Pro and Pro Max (updated from my first set). The video is also a mini-travelogue of my recent trips to Taiwan and Peru. After my explainer video on Apple Log, this one dives a bit deeper into practical LUT workflows, and my state of mind about shooting digital-cinema-grade footage with a device I always have with me.

There’s a lot going on here.

Conflicted in Peru

Me relaxing on vacation. Photo by Forest Key.

I always have a moment when packing for a trip: Which camera to bring? Which lenses? I know I’m always happier when I pack less, like just a single prime lens. But sometimes FOMO gets me and I pack three zooms.

For my trip to Lima, I brought my Sony a7RIV with the uninspiring-but-compact Sony 35mm F2.8 prime. I lugged it around for a few days, but wasn’t really feeling it.

Meanwhile, my iPhone 15 Pro Max was calling to me with its ProRes Log video mode. “I’m 10-bit!” it would say. “Think of the fun you’ll have color grading me!”

I told my phone to shut up, and proceeded to shoot very little with it — or my Sony. Like a squirrel in the middle of the street, drawn in two different directions at once, I creatively froze.

Photography, for me, is made up of a lot of habits, and shooting iPhone video with aesthetic intent is just not yet baked into my travel muscle memory.

Made in Taiwan

A month later, I took a family trip to Taiwan, one of my favorite places in the world. I’d had some time to process my Peru deadlock, and decided to stop judging my own creative impulses, and let inspiration guide me in which camera I pulled out.

I wound up shooting a lot of video.

Me relaxing on vacation. Photo by Josh Locker.

I loved shooting ProRes Log in Taiwan with the iPhone 15 Pro Max. I’d occasionally reach for Blackmagic Camera, but I often just used the default camera app. I stuck my phone (with its crumbling case) out of taxi sunroofs and skyscraper windows, held it above teeming crowds and shoved it between chain-link fences. Seeing the broad dynamic range I was capturing in scenarios from noontime sun to neon-lit nights got me excited about grading the footage later.

It’s exactly the way I feel about shooting raw stills with my Sony, knowing that I’ll be able to go crazy on them in Lightroom. The photographing act is just half of the process.

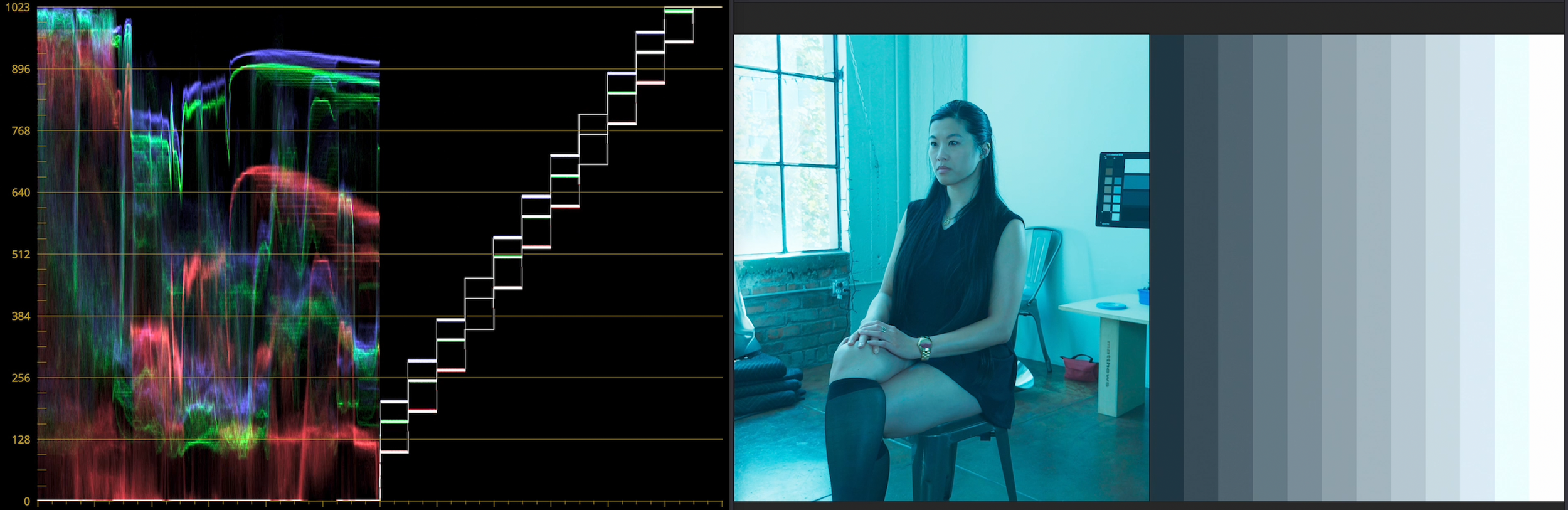

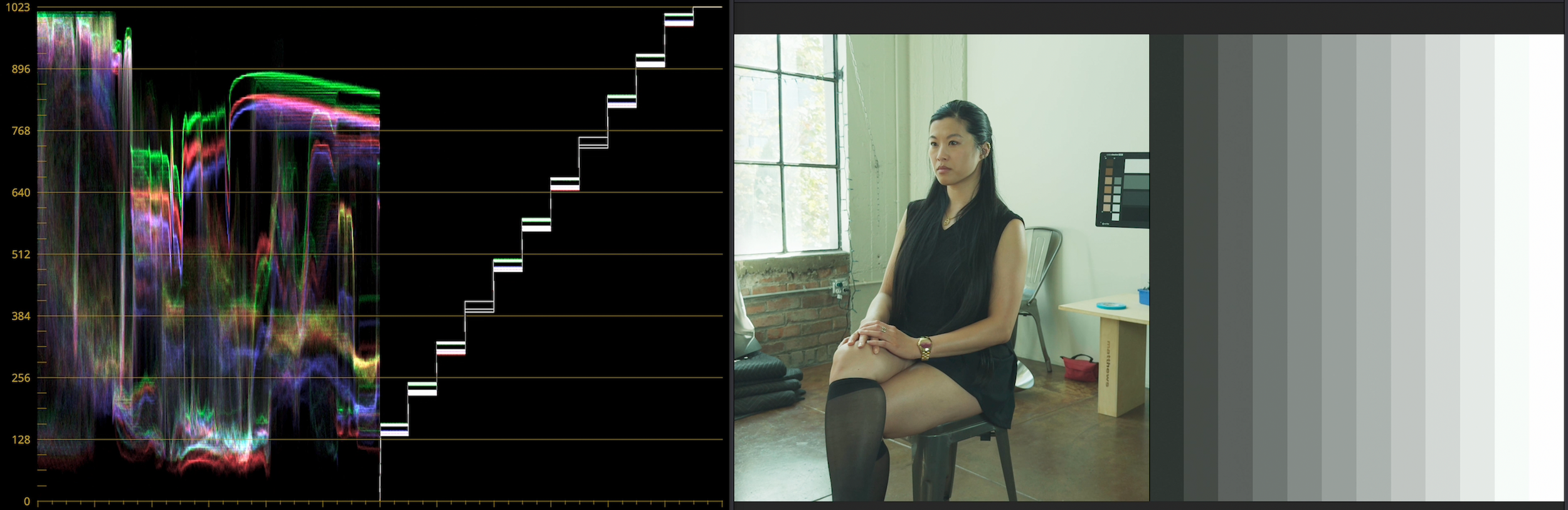

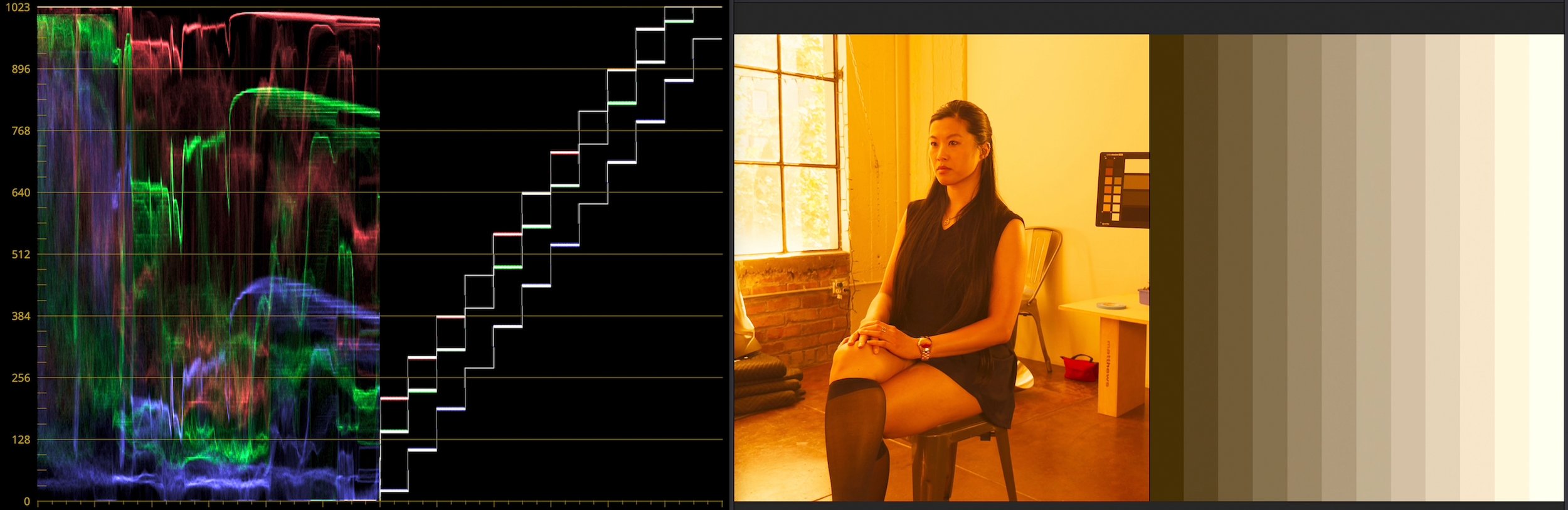

The frames above show how color transforms a single shot from the video.

LUTs, Looks, and Magic Bullets

There’s been a bit of a gold rush of people hawking creative LUTs that apply a particular “look” to iPhone Log footage. My day job is, in part, helping make color tools like Magic Bullet Looks, which can do so much more than any LUT. Creative LUTs are great, and by all means support the folks making them — but that’s not what my iPhone LUTs were or are.

The Prolost iPhone LUTs convert Apple Log to various other color spaces, and support three kinds of workflow:

Grade Under a Display Transform LUT

Apple Log is a totally decent color space to work in, so color correcting Apple Log can be as simple as applying Magic Bullet Colorista and choosing one of my Monitor & Grade LUTs. That’s what you see me doing in the video above. Colorista (set to Log mode) does its work on the native Apple Log pixels, and the LUT converts the result to look nice on video.

Many other systems work like this, including LumaFusion, which ships with Prolost Apple Log LUTs.

The key is color correcting under the LUT.
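To see why the order matters, here is a minimal Python sketch. Everything in it is a stand-in: `toy_log_encode` is a made-up log curve (not Apple Log) and `toy_display_lut` is a made-up display transform (not one of the Prolost LUTs). It only illustrates that a correction applied to the log pixels, before the display transform, can recover highlight detail that is already gone if you correct after the transform.

```python
import math

def toy_log_encode(linear):
    """Hypothetical log curve (NOT Apple Log): maps 2^-10..2^6 linear to 0..1."""
    stops = math.log2(max(linear, 2 ** -10))
    return (stops + 10) / 16

def toy_display_lut(log_value):
    """Hypothetical display transform: decode the toy log, clip to 0..1, apply gamma."""
    linear = 2 ** (log_value * 16 - 10)
    clipped = min(1.0, max(0.0, linear))
    return clipped ** (1 / 2.4)

def grade_under(log_value, stops):
    """Exposure change applied to the log pixels, then the display transform."""
    return toy_display_lut(log_value + stops / 16)

def grade_over(log_value, gain):
    """Display transform first, then a gain on the already-clipped result."""
    return toy_display_lut(log_value) * gain

# Two different highlights, both brighter than display white.
highlight_a = toy_log_encode(2.0)
highlight_b = toy_log_encode(4.0)

# Darkening after the transform: both highlights clipped first, so they stay identical.
print(grade_over(highlight_a, 0.5), grade_over(highlight_b, 0.5))

# Darkening under the transform: the two highlights separate again.
print(grade_under(highlight_a, -2), grade_under(highlight_b, -2))
```

In this toy setup the log encoding still holds both highlights as distinct values, which is the whole point of doing the correction there.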

Bring Apple Log into an Existing Workflow

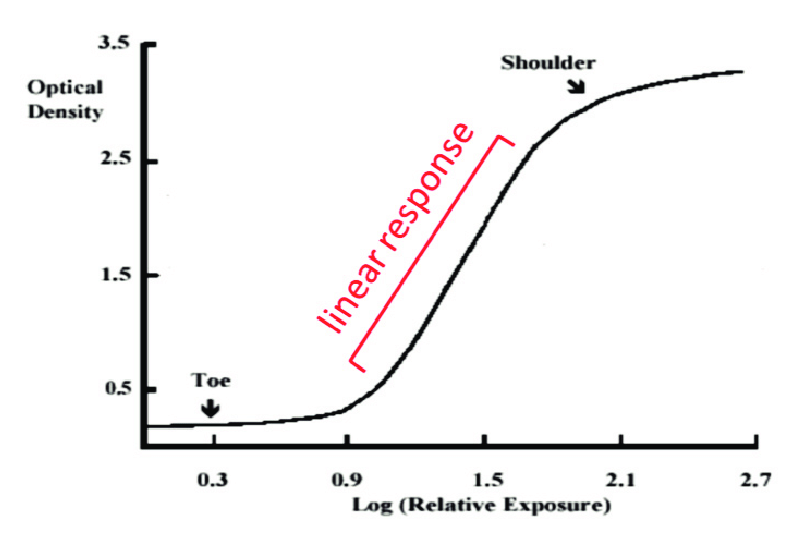

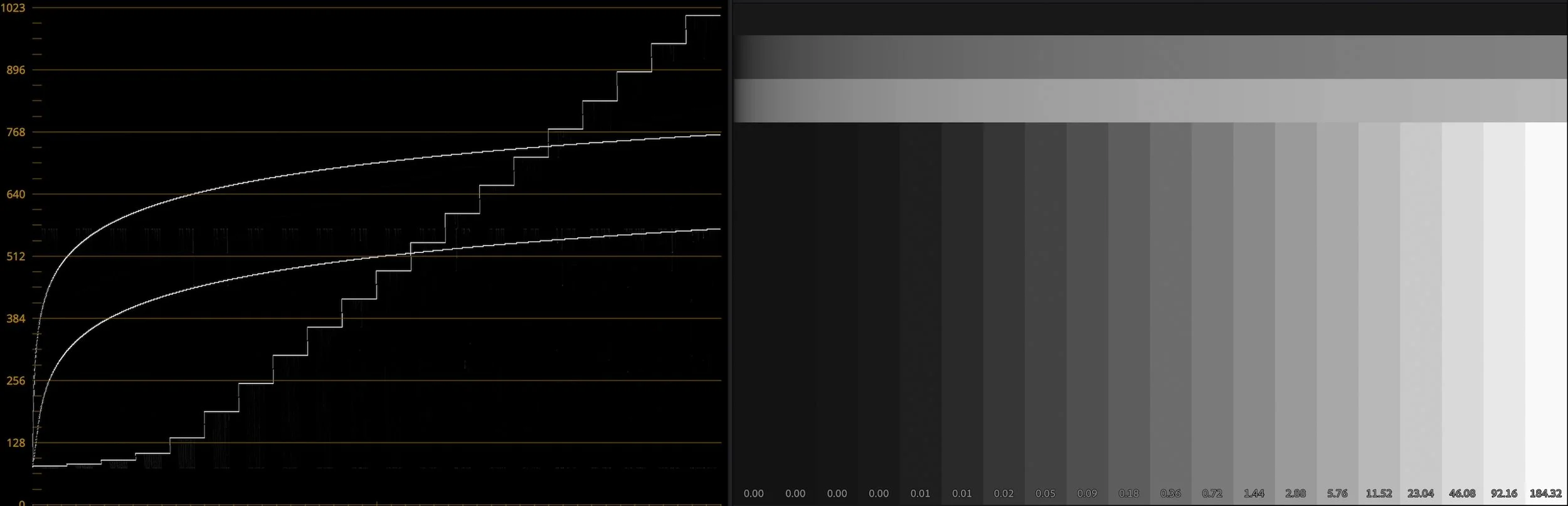

Color work is often done in an intermediate color space. This is usually some kind of wide-gamut, log format, such as DaVinci Wide Gamut/Intermediate, or one of the ACES log spaces.

The Prolost ACES LUTs convert Apple Log to either ACEScc or ACEScct log, allowing you to grade your iPhone footage alongside any other professional camera, and output them all through the same pipeline.
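For the curious, the two ACES log encodings differ only in the shadows. The sketch below follows the encoding formulas as I understand them from the Academy's ACEScc and ACEScct specifications. It covers only the final step, from linear AP1 values to log code values. It is not how the Prolost LUTs are built, and it leaves out the Apple Log decode and the gamut conversion that a real conversion also needs. The function names are my own.

```python
import math

def lin_to_acescc(lin):
    """Linear AP1 value to ACEScc: a pure log curve with a small offset near zero."""
    if lin <= 0:
        return (math.log2(2 ** -16) + 9.72) / 17.52
    if lin < 2 ** -15:
        return (math.log2(2 ** -16 + lin * 0.5) + 9.72) / 17.52
    return (math.log2(lin) + 9.72) / 17.52

def lin_to_acescct(lin):
    """Linear AP1 value to ACEScct: same log curve, but with a linear toe in the shadows."""
    if lin <= 0.0078125:
        return 10.5402377416545 * lin + 0.0729055341958355
    return (math.log2(lin) + 9.72) / 17.52

# Compare the two encodings from black up to 18% gray.
for lin in (0.0, 0.001, 0.0078125, 0.18):
    print(lin, lin_to_acescc(lin), lin_to_acescct(lin))
```

Above the toe the two curves are identical, so midtones and highlights grade the same way in either space. The toe in ACEScct keeps shadows from stretching out as far under lift-style adjustments.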

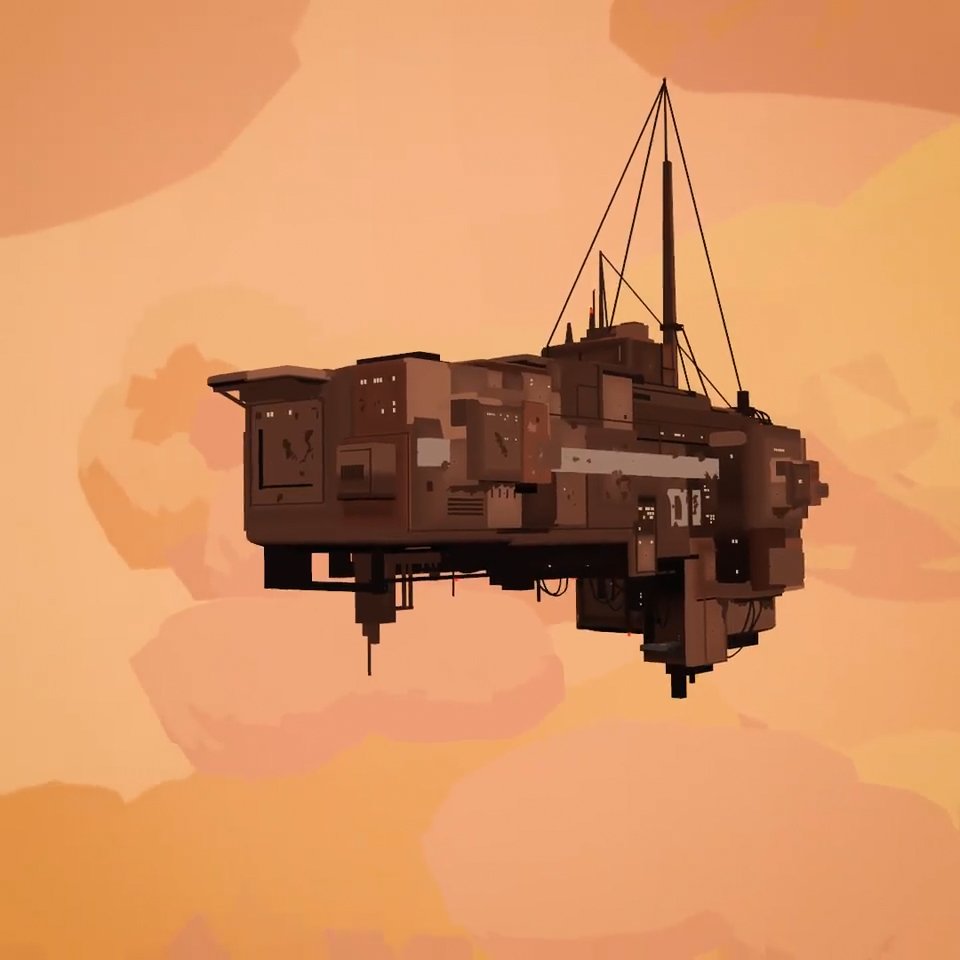

Shooting Through a LUT

The Blackmagic Camera app allows you to load any LUTs you want and preview through them without baking them into your footage. With my LUTs, you can shoot with the same LUTs you grade under later, for a truly professional (no joke!) workflow.

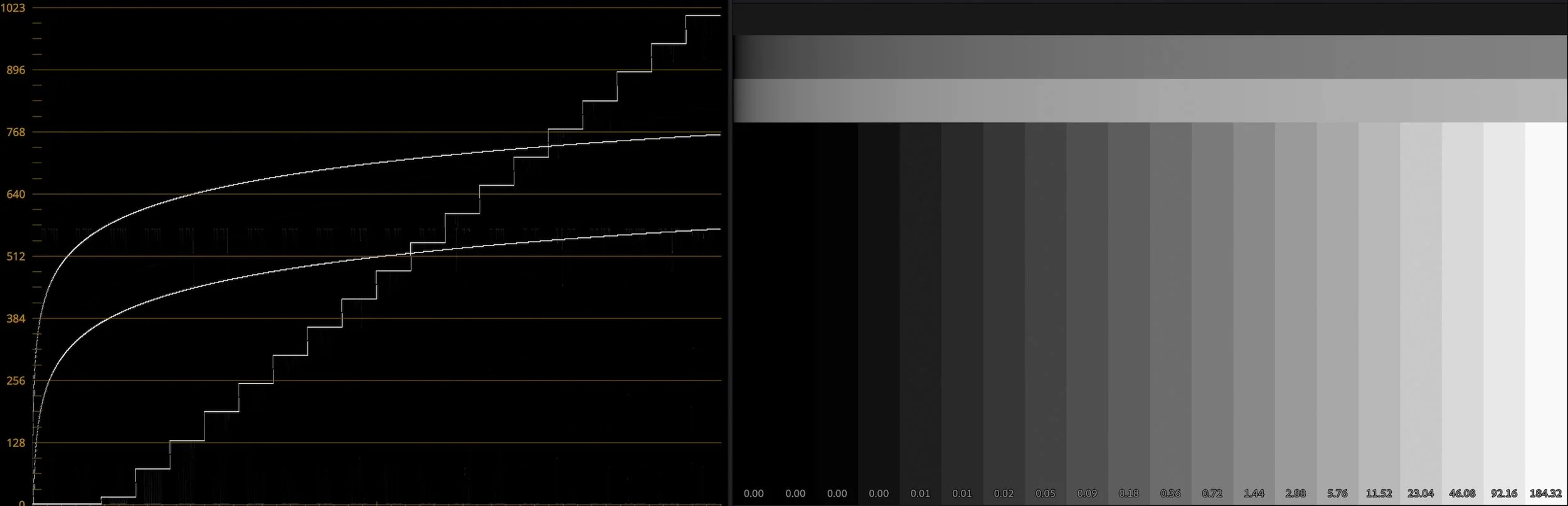

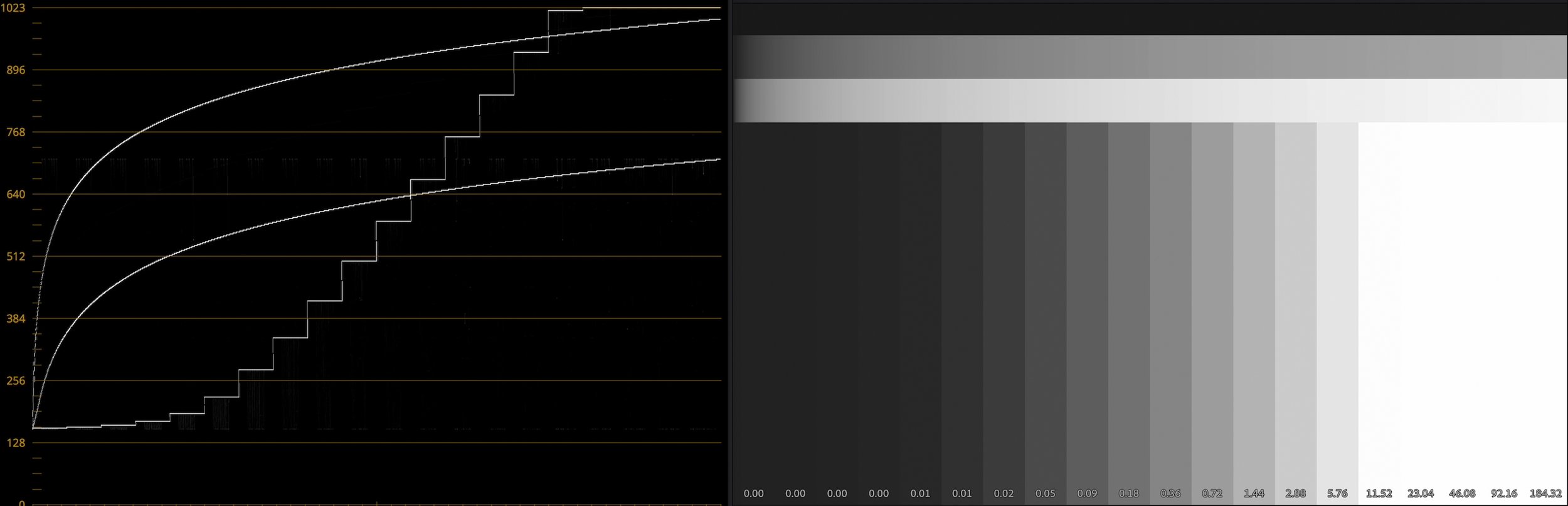

The real stars of this update, though, are the FC LUTs. They add an informative False Color overlay to the Shoot/Grade LUTs, making sure you always nail your exposure. Watch the video to see them in action. I already can’t imagine shooting without them.

These LUTs work well in Blackmagic Camera or even on an external HDMI monitor.

Adjusting exposure with a variable ND filter until the 18% gray card lights up yellow, for perfect exposure. PL-HERO-FC LUT in Blackmagic Camera.
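Conceptually, a false color overlay is just a lookup from signal level to a flag color. The Python sketch below shows the idea with invented numbers. The band edges, the `MID_GRAY_LEVEL` placeholder, and every color except yellow-for-middle-gray are assumptions for illustration, not the values baked into the FC LUTs.

```python
# Hypothetical signal level (0..1) where an 18% gray card lands in the log image.
MID_GRAY_LEVEL = 0.45

# Hypothetical bands: (low, high, overlay color). Only "yellow means middle gray"
# comes from the post; the rest is made up for the example.
BANDS = [
    (0.00, 0.05, "purple"),                                   # near-black shadows
    (MID_GRAY_LEVEL - 0.02, MID_GRAY_LEVEL + 0.02, "yellow"),  # middle gray
    (0.95, 1.01, "red"),                                      # near clipping
]

def false_color(level):
    """Return the overlay color for a signal level, or None to show the normal image."""
    for low, high, color in BANDS:
        if low <= level < high:
            return color
    return None

# A gray card that is underexposed, then correctly exposed.
print(false_color(0.30))
print(false_color(0.45))
```

Dialing the ND filter moves the gray card's level up or down until it falls inside the middle-gray band and the overlay color appears.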

Gathering Resolve

I’ve never edited a whole actual thing in Resolve before.

As if this video wasn’t enough work already (I shot the A-roll in mid-December), I decided to use it as a personal test-case for creative editorial in DaVinci Resolve. It’s the ACES LUTs that allowed me to incorporate Magic Bullet Looks into my Resolve color workflow.

Maxon just shipped a really nice update to Magic Bullet Looks, with simplified color management made possible because more and more of the apps we support now do darn fine color management at the timeline level.

So in Resolve, I can use my LUT to convert Apple Log to ACEScc, and then apply Magic Bullet Looks, which can now be set to work in ACEScc with a single click.

The new streamlined color options in Magic Bullet Looks. Choose Custom to get the full manual control.

I can sneak additional Resolve corrector nodes between those two for local corrections. Resolve is great at this, and Looks is great at creative look development, so this is a match made in heaven.

A little face lift.

Then, at the end, I use an ACES Transform node to convert to Rec. 709 video.

Get to the chopper.

An expert Resolve user could replace my LUTs with Resolve’s built-in Color Space Transform nodes, but the LUTs make this process easier and more reliable.

Gear Inspires

Every photographer knows the feeling of lusting after new gear. We know it so well that we remind ourselves constantly that “next camera syndrome” is debilitating, and that “most cameras are better than most photographers.” Gear is not the answer. Go shoot.

There is, however, a counterpoint to these truths: As shooters, we take inspiration where we can get it. And sometimes a new technique, a new locale, or even, yes, a new bit of kit is what provides it.

The key is to listen for that inspiration, and not judge it.

Even if it’s coming from your phone.

Jiufen village, Taiwan. Two a7RIV shots stitched in Lightroom.