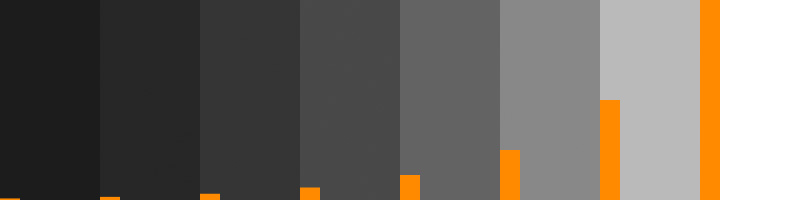

Note that we now have much more room for variation in dark tones. If we have a limited bit depth available for storing this image, we're far less likely to see banding or noise in the shadows with this arrangement.

Why is this important? As we've already discussed, it is underexposure tolerance that limits our ability to capture a scene on a digital sensor. We can set our exposure to capture whatever highlight detail we want, but at some point our shadows will become too noisy or quantized to be usable. And this danger is exponentially more apparent when you realize that the chip is capturing linear light values. The first thing we do with those values is brighten them up for display and/or storage, revealing in the process any nastiness in the lower registers.
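To make the "brighten them up" step concrete, here is a minimal Python sketch of what happens to adjacent linear values when they are encoded for display. It assumes 8-bit linear storage and a simple 1/2.2 power-law brightening curve; both are illustrative assumptions, not the transfer curve of any particular camera or format.

```python
GAMMA = 1 / 2.2   # assumed power-law brightening curve, for illustration only
MAX_CODE = 255    # assumed 8-bit linear storage

def encode(linear_code: int) -> float:
    """Map a linear code value to a brightened (gamma-encoded) code value."""
    return MAX_CODE * (linear_code / MAX_CODE) ** GAMMA

def step_after_encoding(linear_code: int) -> float:
    """How far apart two adjacent linear codes land after brightening."""
    return encode(linear_code + 1) - encode(linear_code)

if __name__ == "__main__":
    for code in (1, 2, 4, 16, 64, 128, 254):
        step = step_after_encoding(code)
        print(f"linear {code:3d} -> {code + 1:3d}: step of {step:5.2f} encoded codes")
```

In the deep shadows, a one-code difference in linear values turns into a jump of several codes after encoding, so any quantization or noise down there gets stretched out where you can see it. Near the top, several linear codes squeeze into a single encoded step.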

You can think of the orange bars in the first graph as pixel values. When you shoot the chart with your digital camera, you're spending half of your sensor's linear recording range on the values between the rightmost chip and the one next to it! By the time you start looking at the mid-gray levels (the center two chips), you're already into the bottom 1/8th of your sensor's light-capturing power. You're putting precious things like skin tones at the weakest portion of the chip's response and saving huge amounts of precision for highlights that we have trouble distinguishing with our naked eyes.
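The arithmetic behind "half of your range in the top stop" is easy to check. This Python sketch assumes a hypothetical 12-bit linear raw file (4096 code values) and counts how many of those values fall into each stop below clipping, where each stop down halves the light.

```python
def codes_per_stop(bit_depth: int, stops: int) -> list[int]:
    """Count linear code values in each stop, starting at the clip point and working down."""
    upper = 2 ** bit_depth          # total number of linear code values
    counts = []
    for _ in range(stops):
        lower = upper // 2          # one stop down = half the light
        counts.append(upper - lower)  # codes in the range [lower, upper)
        upper = lower
    return counts

if __name__ == "__main__":
    BIT_DEPTH = 12  # hypothetical 12-bit linear sensor
    total = 2 ** BIT_DEPTH
    remaining = total
    for i, n in enumerate(codes_per_stop(BIT_DEPTH, 8), start=1):
        remaining -= n
        print(f"stop {i} below clip: {n:5d} codes ({n / total:.2%}); {remaining} codes left below")
```

The top stop takes 2048 of the 4096 values, and after three stops only 512 values (1/8th of the total) are left for everything darker.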

This is the damning state of digital image capture. We are pushing these CCD and CMOS chips to their limits just to capture a normal image, because the lower registers of a digital image sensor are where noise, static-pattern artifacting, and discoloration lurk. If you've ever tried to salvage an underexposed shot, you've seen these artifacts. We flirt with them every time we open the shutter.

Any amount of additional exposure we can add at the time of capture creates a drastic reduction in these artifacts. This is the "expose to the right" philosophy: capture as bright an image as you dare, and make it darker in processing if you like. You'll take whatever artifacts were there and crush them into nice, silky blacks. This works perfectly—until you clip.

The amount of noise and static-pattern nastiness you can handle is a subjective thing, but clipping is not. You can debate whether an underexposed image is salvageable, but no such argument can be had about overexposure. Clipping is clipping, and while applications such as Lightroom and Aperture offer clever ways of milking every last bit of captured highlight detail out of a raw file, they too eventually hit a brick wall.
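Here is a toy Python model of "expose to the right" and of why clipping is final. It assumes a normalized sensor that clips at 1.0 and a fixed noise floor of 0.002; both numbers are hypothetical, and a constant noise floor is a deliberate simplification of real sensor noise. Extra exposure followed by darkening in processing shrinks the noise relative to the image, but any scene values pushed past the clip point collapse together.

```python
FULL_WELL = 1.0       # hypothetical normalized clipping point of the sensor
NOISE_FLOOR = 0.002   # hypothetical fixed noise level, in normalized linear units

def capture(scene: list[float], extra_stops: int) -> list[float]:
    """Expose the scene with extra stops of exposure; anything past full well clips."""
    gain = 2.0 ** extra_stops
    return [min(v * gain, FULL_WELL) for v in scene]

def darken(values: list[float], stops: int) -> list[float]:
    """Bring the image back down by the same number of stops in processing."""
    gain = 2.0 ** -stops
    return [v * gain for v in values]

def noise_after_darkening(stops: int) -> float:
    """Under this simplified model, the fixed noise floor is scaled down with the image."""
    return NOISE_FLOOR * 2.0 ** -stops

if __name__ == "__main__":
    scene = [0.01, 0.18, 0.4, 0.6]  # hypothetical linear scene values
    for stops in (0, 1, 2):
        result = darken(capture(scene, stops), stops)
        print(f"+{stops} stops: {result}, noise floor {noise_after_darkening(stops):.4f}")
```

One extra stop halves the noise floor, but the brightest swatch already clips and comes back as 0.5 instead of 0.6. With two extra stops, the 0.4 and 0.6 swatches both come back as 0.25: no amount of processing can tell them apart again.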

And that's OK. While HDR enthusiasts might disagree, artful overexposure is as much a part of photography and cinematography as anything else. Everybody clips, even film, and some great films such as Road to Perdition, Million Dollar Baby and 2001: A Space Odyssey would be crippled without their consciously overexposed whites.

The difference, of course, is how film clips, and when.

How does film clip? The answer is gracefully. Where digital sensors slam into a brick wall, film tapers off gradually.
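The difference between a brick wall and a gradual taper can be sketched with two response curves. The soft curve below is a made-up soft-knee function (linear up to a hypothetical knee at 0.8, then an exponential roll-off toward 1.0); it only illustrates the shape and is not film's actual characteristic curve, which is measured as density against log exposure.

```python
import math

KNEE = 0.8  # hypothetical point where the soft curve starts to roll off

def hard_clip(x: float) -> float:
    """Digital-style response: linear up to 1.0, then a brick wall."""
    return min(x, 1.0)

def soft_shoulder(x: float) -> float:
    """Toy film-style response: linear below KNEE, then an exponential
    roll-off that approaches 1.0 gradually instead of stopping dead."""
    if x <= KNEE:
        return x
    headroom = 1.0 - KNEE
    return KNEE + headroom * (1.0 - math.exp(-(x - KNEE) / headroom))

if __name__ == "__main__":
    for x in (0.5, 0.9, 1.0, 1.5, 2.0, 4.0):
        print(f"in {x:3.1f}: hard {hard_clip(x):.3f}  soft {soft_shoulder(x):.3f}")
```

Past 1.0, every input gives the same output from the hard clip, while the soft curve still assigns brighter inputs slightly brighter outputs. The roll-off's slope equals 1 at the knee, so there is no visible kink where the shoulder begins.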

When does film clip? The answer is long, long after even the best digital camera sensors have given up.

More on that in part 2. For now, more swatches! A digital camera sensor converts linear light to linear numbers. Further processing is required to create a viewable image. Film, on the other hand, converts linear light to logarithmic densities. Remember how I described the exponential increase in light values as doubling with each stop? If you graph that increase logarithmically, the results look like a straight line. Here are our swatches with their values converted to log: